Decision trees can be used to solve relatively complex tasks.

A very powerful tool for tackling complex classification and regression problems can be produced by generating several decision trees (a decision tree forest) and calculating an ensemble average from the results of these trees. The correct term here is a random forest.

Where does the random component come into play and why do you even need such a component in a random forest?

Answering the last part of this question is relatively easy. A large number of decision trees (typically between 500 and 1,500) is required to obtain a meaningful ensemble average. The first step is to define the parameters of the problem:

- Select the features to be used as a basis for decision making (in the example with the golfer, these were the day of the week, weather and temperature)

- Determine the size of the decision tree (in the case of the golfer, the two results “on the golf course” and “not on the golf course” were expected)

- Establish the depth of the tree (this refers to the number of decision levels – 4 were used in the last example)

Growing 1,500 identical trees would of course lead to identical results, which would make a mockery of the idea of an ensemble average. The underlying idea of a random forest is to produce different trees due to random variations in the input data and the related decision criteria. To put it another way, you are trusting the wisdom of crowds.

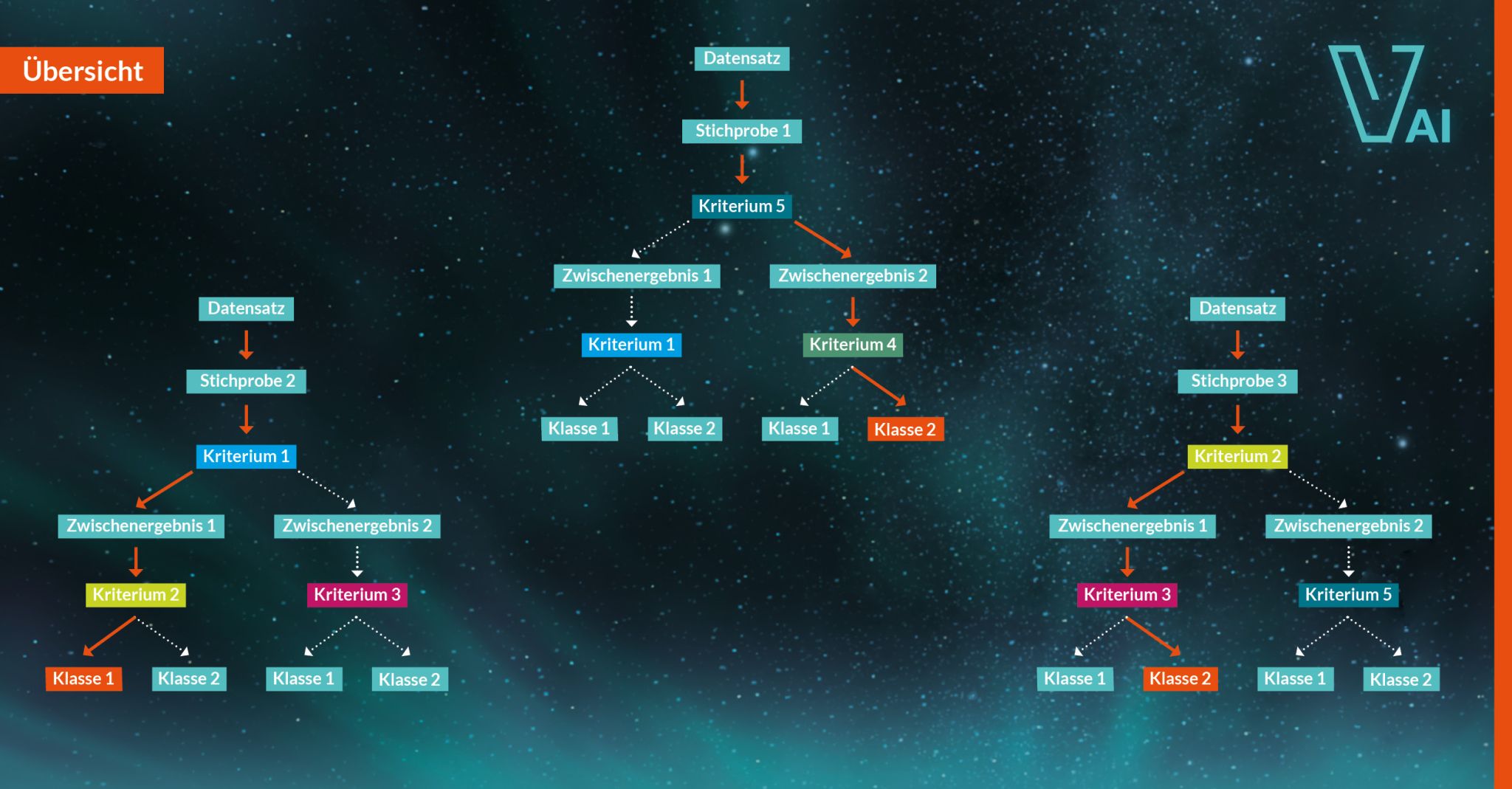

Assuming a sufficient volume of data, the data set to be processed is divided into a training data set and a test data set. Each individual tree is then generated and trained using the following model.

- A random sample is taken from the training data set.

- In addition, certain criteria (such as the day of the week or weather) are selected from the total list and randomly allocated to the decision levels of the tree.

- In the first round, random split criteria are assigned (is the day a Monday; is the weather sunny?).

- The random sample is analysed using the tree generated in this way and the result at this stage is compared with the known result during the training phase.

- The split criteria are adjusted on the basis of the error.

- The random sample is allocated to a class and put back; a new random sample is taken and the training starts again.

After all the trees have been trained, the entire random forest is evaluated using the test data set.